Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

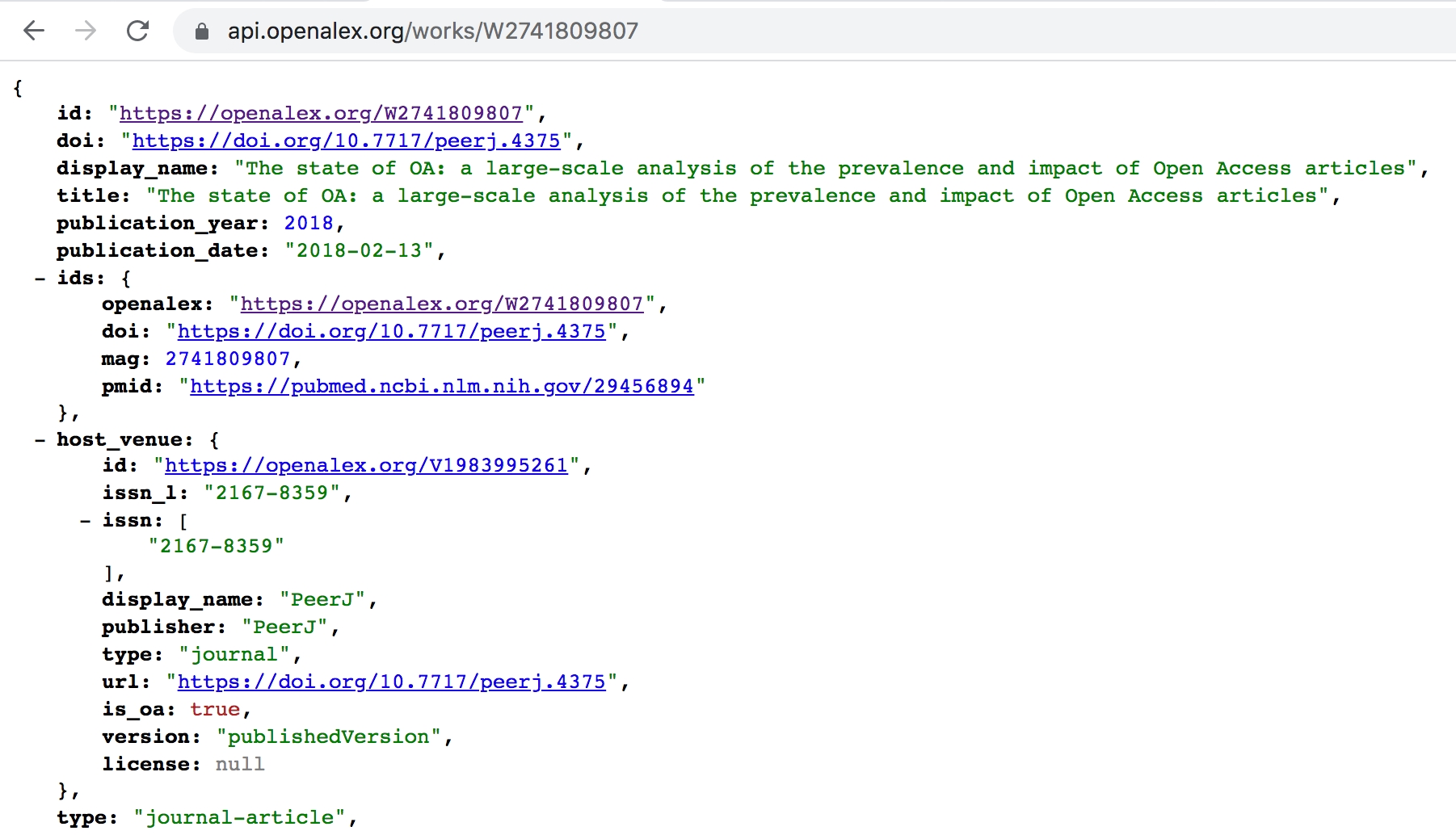

There's a lot of useful data inside a work. When you use the API to get a single work or lists of works, this is what's returned.

abstract_inverted_indexObject: The abstract of the work, as an inverted index, which encodes information about the abstract's words and their positions within the text. Like Microsoft Academic Graph, OpenAlex doesn't include plaintext abstracts due to legal constraints.

Newer works are more likely to have an abstract inverted index. For example, over 60% of works in 2022 have abstract data, compared to 45% for works older than 2000. Full chart is below:

alternate_host_venues (deprecated)The host_venue and alternate_host_venues properties have been deprecated in favor of primary_location and locations. The attributes host_venue and alternate_host_venues are no longer available in the Work object, and trying to access them in filters or group-bys will return an error.

authorshipsList: List of Authorship objects, each representing an author and their institution. Limited to the first 100 authors to maintain API performance.

For more information, see the Authorship object page.

apc_listObject: Information about this work's APC (article processing charge). The object contains:

value: Integer

currency: String

provenance: String — the source of this data. Currently the only value is “doaj” (DOAJ)

value_usd: Integer — the APC converted into USD

This value is the APC list price–the price as listed by the journal’s publisher. That’s not always the price actually paid, because publishers may offer various discounts to authors. Unfortunately we don’t always know this discounted price, but when we do you can find it in apc_paid.

Currently our only source for this data is DOAJ, and so doaj is the only value for apc_list.provenance, but we’ll add other sources over time.

We currently don’t have information on the list price for hybrid journals (toll-access journals that also provide an open-access option), but we will add this at some point. We do have apc_paid information for hybrid OA works occasionally.

You can use this attribute to find works published in Diamond open access journals by looking at works where apc_list.value is zero. See open_access.oa_status for more info.

apc_paidObject: Information about the paid APC (article processing charge) for this work. The object contains:

value: Integer

currency: String

provenance: String — currently either openapc or doaj, but more will be added; see below for details.

value_usd: Integer — the APC converted into USD

You can find the listed APC price (when we know it) for a given work using apc_list. However, authors don’t always pay the listed price; often they get a discounted price from publishers. So it’s useful to know the APC actually paid by authors, as distinct from the list price. This is our effort to provide this.

Our best source for the actually paid price is the OpenAPC project. Where available, we use that data, and so apc_paid.provenance is openapc. Where OpenAPC data is unavailable (and unfortunately this is common) we make our best guess by assuming the author paid the APC list price, and apc_paid.provenance will be set to wherever we got the list price from.

best_oa_locationObject: A Location object with the best available open access location for this work.

We score open locations to determine which is best using these factors:

Must have is_oa: true

type_:_ "publisher" is better than "repository".

version: "publishedVersion" is better than "acceptedVersion", which is better than "submittedVersion".

pdf_url: A location with a direct PDF link is better than one without.

repository rankings: Some major repositories like PubMed Central and arXiv are ranked above others.

biblioObject: Old-timey bibliographic info for this work. This is mostly useful only in citation/reference contexts. These are all strings because sometimes you'll get fun values like "Spring" and "Inside cover."

volume (String)

issue (String)

first_page (String)

last_page (String)

citation_normalized_percentileObject: The percentile of this work's citation count normalized by work type, publication year, and subfield. This field represents the same information as the FWCI expressed as a percentile. Learn more in the reference article: Field Weighted Citation Impact (FWCI).

cited_by_api_urlString: A URL that uses the cites filter to display a list of works that cite this work. This is a way to expand cited_by_count into an actual list of works.

cited_by_countInteger: The number of citations to this work. These are the times that other works have cited this work: Other works ➞ This work.

conceptsList: List of dehydrated Concept objects.

Each Concept object in the list also has one additional property:

score (Float): The strength of the connection between the work and this concept (higher is stronger). This number is produced by AWS Sagemaker, in the last layer of the machine learning model that assigns concepts.

Concepts with a score of at least 0.3 are assigned to the work. However, ancestors of an assigned concept are also added to the work, even if the ancestor scores are below 0.3.

Because ancestor concepts are assigned to works, you may see concepts in works with very low scores, even some zero scores.

corresponding_author_idsList: OpenAlex IDs of any authors for which authorships.is_corresponding is true.

corresponding_institution_idsList: OpenAlex IDs of any institutions found within an authorship for which authorships.is_corresponding is true.

countries_distinct_countInteger: Number of distinct country_codes among the authorships for this work.

counts_by_yearList: Works.cited_by_count for each of the last ten years, binned by year. To put it another way: each year, you can see how many times this work was cited.

Any citations older than ten years old aren't included. Years with zero citations have been removed so you will need to add those in if you need them.

created_dateString: The date this Work object was created in the OpenAlex dataset, expressed as an ISO 8601 date string.

display_nameString: Exactly the same as Work.title. It's useful for Works to include a display_name property, since all the other entities have one.

doiString: The DOI for the work. This is the Canonical External ID for works.

Occasionally, a work has more than one DOI--for example, there might be one DOI for a preprint version hosted on bioRxiv, and another DOI for the published version. However, this field always has just one DOI, the DOI for the published work.

fulltext_originString: If a work's full text is searchable in OpenAlex (has_fulltext is true), this tells you how we got the text. This will be one of:

pdf: We used Grobid to get the text from an open-access PDF.

ngrams: Full text search is enabled using N-grams obtained from the Internet Archive.

This attribute is only available for works with has_fulltext:true.

fwciFloat: The Field-weighted Citation Impact (FWCI), calculated for a work as the ratio of citations received / citations expected in the year of publications and three following years. Learn more in the reference article: Field Weighted Citation Impact (FWCI).

grantsList: List of grant objects, which include the Funder and the award ID, if available. Our grants data comes from Crossref, and is currently fairly limited.

has_fulltextBoolean: Set to true if the work's full text is searchable in OpenAlex. This does not necessarily mean that the full text is available to you, dear reader; rather, it means that we have indexed the full text and can use it to help power searches. If you are trying to find the full text for yourself, try looking in open_access.oa_url.

We get access to the full text in one of two ways: either using an open-access PDF, or using N-grams obtained from the Internet Archive. You can learn where a work's full text came from at fulltext_origin.

host_venue (deprecated)The host_venue and alternate_host_venues properties have been deprecated in favor of primary_location and locations. The attributes host_venue and alternate_host_venues are no longer available in the Work object, and trying to access them in filters or group-bys will return an error.

idString: The OpenAlex ID for this work.

idsObject: All the external identifiers that we know about for this work. IDs are expressed as URIs whenever possible. Possible ID types:

mag (Integer: the Microsoft Academic Graph ID)

openalex (String: The OpenAlex ID. Same as Work.id)

pmid (String: The Pubmed Identifier)

pmcid (String: the Pubmed Central identifier)

Most works are missing one or more ID types (either because we don't know the ID, or because it was never assigned). Keys for null IDs are not displayed.

indexed_inList: The sources this work is indexed in. Possible values: arxiv, crossref, doaj, pubmed.

institutions_distinct_countInteger: Number of distinct institutions among the authorships for this work.

is_paratextBoolean: True if we think this work is paratext.

In our context, paratext is stuff that's in a scholarly venue (like a journal) but is about the venue rather than a scholarly work properly speaking. Some examples and nonexamples:

yep it's paratext: front cover, back cover, table of contents, editorial board listing, issue information, masthead.

no, not paratext: research paper, dataset, letters to the editor, figures

Turns out there is a lot of paratext in registries like Crossref. That's not a bad thing... but we've found that it's good to have a way to filter it out.

We determine is_paratext algorithmically using title heuristics.

is_retractedBoolean: True if we know this work has been retracted.

We identify works that have been retracted using the public Retraction Watch database, a public resource made possible by a partnership between Crossref and The Center for Scientific Integrity.

keywordsList of objects: Short phrases identified based on works' Topics. For background on how Keywords are identified, see the Keywords page at OpenAlex help pages.

The score for each keyword represents the similarity score of that keyword to the title and abstract text of the work.

We provide up to 5 keywords per work, for all keywords with scores above a certain threshold.

languageString: The language of the work in ISO 639-1 format. The language is automatically detected using the information we have about the work. We use the langdetect software library on the words in the work's abstract, or the title if we do not have the abstract. The source code for this procedure is here. Keep in mind that this method is not perfect, and that in some cases the language of the title or abstract could be different from the body of the work.

A few things to keep in mind about this:

We don't always assign a language if we do not have enough words available to accurately guess.

We report the language of the metadata, not the full text. For example, if a work is in French, but the title and abstract are in English, we report the language as English.

In some cases, abstracts are in two different languages. Unfortunately, when this happens, what we report will not be accurate.

licenseString: The license applied to this work at this host. Most toll-access works don't have an explicit license (they're under "all rights reserved" copyright), so this field generally has content only if is_oa is true.

locationsList: A list of Location objects describing all unique places where this work lives.

locations_countInteger: Number of locations for this work.

meshList: List of MeSH tag objects. Only works found in PubMed have MeSH tags; for all other works, this is an empty list.

open_accessObject: Information about the access status of this work, as an OpenAccess object.

primary_locationObject: A Location object with the primary location of this work.

The primary_location is where you can find the best (closest to the version of record) copy of this work. For a peer-reviewed journal article, this would be a full text published version, hosted by the publisher at the article's DOI URL.

primary_topicObject

The top ranked Topic for this work. This is the same as the first item in Work.topics.

publication_dateString: The day when this work was published, formatted as an ISO 8601 date.

Where different publication dates exist, we usually select the earliest available date of electronic publication.

This date applies to the version found at Work.url. The other versions, found in Work.locations, may have been published at different (earlier) dates.

publication_yearInteger: The year this work was published.

This year applies to the version found at Work.url. The other versions, found in Work.locations, may have been published in different (earlier) years.

referenced_worksList: OpenAlex IDs for works that this work cites. These are citations that go from this work out to another work: This work ➞ Other works.

related_worksList: OpenAlex IDs for works related to this work. Related works are computed algorithmically; the algorithm finds recent papers with the most concepts in common with the current paper.

sustainable_development_goalsList: List of objects

The United Nations' 17 Sustainable Development Goals are a collection of goals at the heart of a global "shared blueprint for peace and prosperity for people and the planet." We use a machine learning model to tag works with their relevance to these goals based on our OpenAlex SDG Classifier, an mBERT machine learning model developed by the Aurora Universities Network. The score represents the model's predicted probability of the work's relevance for a particular goal.

We display all of the SDGs with a prediction score higher than 0.4.

topicsList: List of objects

The top ranked Topics for this work. We provide up to 3 topics per work.

titleString: The title of this work.

This is exactly the same as Work.display_name. We include both attributes with the same information because we want all entities to have a display_name, but there's a longstanding tradition of calling this the "title," so we figured you'll be expecting works to have it as a property.

typeString: The type of the work.

You can see all of the different types along with their counts in the OpenAlex API here: https://api.openalex.org/works?group_by=type.

Most works are type article. This includes what was formerly (and currently in type_crossref) labeled as journal-article, proceedings-article, and posted-content. We consider all of these to be article type works, and the distinctions between them to be more about where they are published or hosted:

Journal articles will have a primary_location.source.type of journal

Conference proceedings will have a primary_location.source.type of conference

Preprints or "posted content" will have a primary_location.version of submittedVersion

(Note that distinguishing between journals and conferences is a hard problem, one we often get wrong. We are working on improving this, but we also point out that the two have a lot of overlap in terms of their roles as hosts of research publications.)

Works that are hosted primarily on a preprint, or that are identified speicifically as preprints in the metadata we receive, are assigned the type preprint rather than article.

Works that represent stuff that is about the venue (such as a journal)—rather than a scholarly work properly speaking—have type paratext. These include things like front-covers, back-covers, tables of contents, and the journal itself (e.g., https://openalex.org/W4232230324).

We also have types for letter , editorial , erratum (corrections), libguides , supplementary-materials , and review (currently, articles that come from journals that exclusively publish review articles). Coverage is low on these but will improve.

Other work types follow the Crossref "type" controlled vocabulary—see type_crossref.

type_crossrefString: Legacy type information, using Crossref's "type" controlled vocabulary.

These are the work types that we used to use, before switching to our current system (see type).

You can see all possible values of Crossref's "type" controlled vocabulary via the Crossref api here: https://api.crossref.org/types.

Where possible, we just pass along Crossref's type value for each work. When that's impossible (eg the work isn't in Crossref), we do our best to figure out the type ourselves.

updated_dateString: The last time anything in this Work object changed, expressed as an ISO 8601 date string (in UTC). This date is updated for any change at all, including increases in various counts.

OpenAccess objectThe OpenAccess object describes access options for a given work. It's only found as part of the Work object.

any_repository_has_fulltextBoolean: True if any of this work's locations has location.is_oa=true and location.source.type=repository.

Use case: researchers want to track Green OA, using a definition of "any repository hosts this." OpenAlex's definition (as used in oa_status) doesn't support this, because as soon as there's a publisher-hosted copy (bronze, hybrid, or gold), oa_status is set to that publisher-hosted status.

So there's a lot of repository-hosted content that the oa_status can't tell you about. Our State of OA paper calls this "shadowed Green." This feature makes it possible to track shadowed Green.

is_oaBoolean: True if this work is Open Access (OA).

There are many ways to define OA. OpenAlex uses a broad definition: having a URL where you can read the fulltext of this work without needing to pay money or log in. You can use the locations and oa_status fields to narrow your results further, accommodating any definition of OA you like.

oa_statusString: The Open Access (OA) status of this work. Possible values are:

gold: Published in a fully OA journal.

green: Toll-access on the publisher landing page, but there is a free copy in an OA repository.

hybrid: Free under an open license in a toll-access journal.

bronze: Free to read on the publisher landing page, but without any identifiable license.

closed: All other articles.

oa_urlString: The best Open Access (OA) URL for this work.

Although there are many ways to define OA, in this context an OA URL is one where you can read the fulltext of this work without needing to pay money or log in. The "best" such URL is the one closest to the version of record.

This URL might be a direct link to a PDF, or it might be to a landing page that links to the free PDF

Query the OpenAlex dataset using the magic of The Internet

If you open these examples in a web browser, they will look much better if you have a browser plug-in such as JSONVue installed.

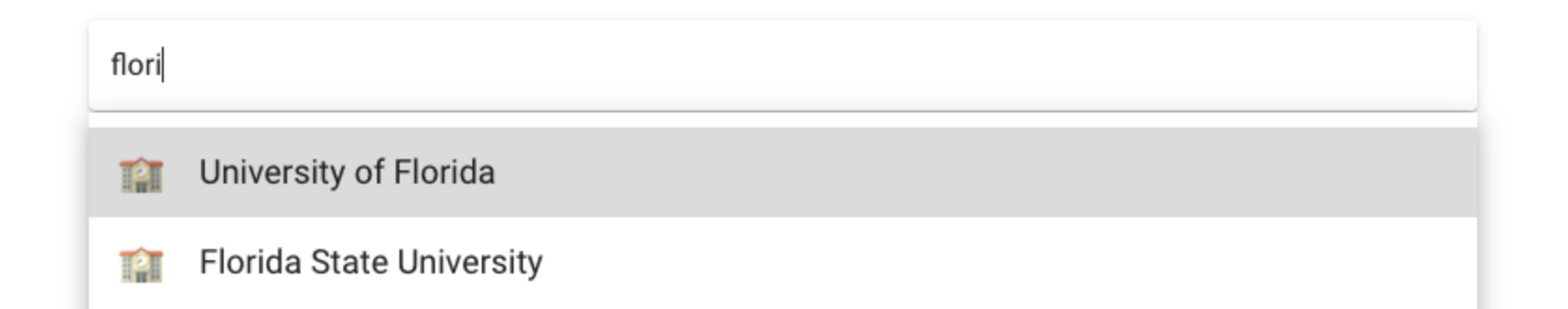

You can use the institutions endpoint to learn about universities and research centers. OpenAlex has a powerful search feature that searches across 108,000 institutions.

Lets use it to search for Stanford University:

Find Stanford University

https://api.openalex.org/institutions?search=stanford

Our first result looks correct (yeah!):

We can use the ID https://openalex.org/I97018004 in that result to find out more.

Show works where at least one author is associated with Stanford University

https://api.openalex.org/works?filter=institutions.id:https://openalex.org/I97018004

This is just one of the 50+ ways that you can filter works!

Right now the list shows records for all years. Lets narrow it down to works that were published between 2010 to 2020, and sort from newest to oldest.

Show works with publication years 2010 to 2020, associated with Stanford University https://api.openalex.org/works?filter=institutions.id:https://openalex.org/I97018004,publication_year:2010-2020&sort=publication_date:desc

Finally, you can group our result by publication year to get our final result, which is the number of articles produced by Stanford, by year from 2010 to 2020. There are more than 30 ways to group records in OpenAlex, including by publisher, journal, and open access status.

That gives a result like this:

Jump into an area of OpenAlex that interests you:

And check out our tutorials page for some hands-on examples!

OpenAlex is:

Big — We have about twice the coverage of the other services, and have significantly better coverage of non-English works and works from the Global South.

Easy — Our service is fast, modern, and well-documented.

Open — Our complete dataset is free under the CC0 license, which allows for transparency and reuse.

Priem, J., Piwowar, H., & Orr, R. (2022). OpenAlex: A fully-open index of scholarly works, authors, venues, institutions, and concepts. ArXiv. https://arxiv.org/abs/2205.01833

Learn more about the OpenAlex entities:

The Location object describes the location of a given work. It's only found as part of the Work object.

There are three places in the Work object where you can find locations:

is_acceptedis_oaBoolean: True if an Open Access (OA) version of this work is available at this location.

is_publishedString: The landing page URL for this location.

The concept of a source is meant to capture a certain social relationship between the host organization and a version of a work. When an organization puts the work on the internet, there is an understanding that they have, at some level, endorsed the work. This level varies, and can be very different depending on the source!

String: A URL where you can find this location as a PDF.

publishedVersion: The document’s version of record. This is the most authoritative version.

acceptedVersion: The document after having completed peer review and being officially accepted for publication. It will lack publisher formatting, but the content should be interchangeable with the that of the publishedVersion.

submittedVersion: the document as submitted to the publisher by the authors, but before peer-review. Its content may differ significantly from that of the accepted article.

It's easy to get a work from from the API with: /works/<entity_id> Here's an example:

You can look up works using external IDs such as a DOI:

You can use the full ID or a shorter Uniform Resource Name (URN) format like so:

Available external IDs for works are:

You must make sure that the ID(s) you supply are valid and correct. If an ID you request is incorrect, you will get no result. If you request an illegal ID—such as one containing a , or &, the query will fail and you will get a 403 error.

affiliationsList: List of objects

authorauthor_positionString: A summarized description of this author's position in the work's author list. Possible values are first, middle, and last.

It's not strictly necessary, because author order is already implicitly recorded by the list order of Authorship objects; however it's useful in some contexts to have this as a categorical value.

countriesList: The country or countries for this author.

We determine the countries using a combination of matched institutions and parsing of the raw affiliation strings, so we can have this information for some authors even if we do not have a specific institutional affiliation.

institutionsis_correspondingBoolean: If true, this is a corresponding author for this work.

This is a new feature, and the information may be missing for many works. We are working on this, and coverage will improve soon.

raw_affiliation_stringsList: This author's affiliation as it originally came to us (on a webpage or in an API), as a list of raw unformatted strings. If there is only one affiliation, it will be a list of length one.

raw_author_nameString: This author's name as it originally came to us (on a webpage or in an API), as a raw unformatted string.

Lets use the OpenAlex API to get journal articles and books published by authors at Stanford University. We'll limit our search to articles published between 2010 and 2020. Since OpenAlex is free and openly available, these examples work without any login or account creation.

The works endpoint contains over 240 million articles, books, and theses . We can filter to show works associated with Stanford.

There you have it! This same technique can be applied to hundreds of questions around scholarly data. The data you received is under a CC0 license, so not only did you access it easily, you can share it freely!

is a fully open catalog of the global research system. It's named after the and made by the nonprofit .

This is the technical documentation for OpenAlex, including the and the . Here, you can learn how to set up your code to access OpenAlex's data. If you want to explore the data as a human, you may be more interested in .

The OpenAlex dataset describes scholarly and how those entities are connected to each other. Types of entities include , , , , , , and .

Together, these make a huge web (or more technically, heterogeneous directed ) of hundreds of millions of entities and billions of connections between them all.

Learn more at our general help center article:

We offer a fast, modern REST API to get OpenAlex data programmatically. It's free and requires no authentication. The daily limit for API calls is 100,000 requests per user per day. For best performance, to all API requests, like mailto=example@domain.com.

There is also a complete database snapshot available to download.

The API has a limit of 100,000 calls per day, and the snapshot is updated monthly. If you need a higher limit, or more frequent updates, please look into

The web interface for OpenAlex, built directly on top of the API, is the quickest and easiest way to .

OpenAlex offers an open replacement for industry-standard scientific knowledge bases like Elsevier's Scopus and Clarivate's Web of Science. these paywalled services, OpenAlex offers significant advantages in terms of inclusivity, affordability, and avaliability.

Many people and organizations have already found great value using OpenAlex. Have a look at the to hear what they've said!

For tech support and bug reports, please visit our . You can also join the , and follow us on and .

If you use OpenAlex in research, please cite :

The OpenAlex dataset describes scholarly entities and how those entities are connected to each other. Together, these make a huge web (or more technically, heterogeneous directed ) of hundreds of millions of entities and billions of connections between them all.

: Scholarly documents like journal articles, books, datasets, and theses

: People who create works

: Where works are hosted (such as journals, conferences, and repositories)

: Universities and other organizations to which authors claim affiliations

: Topics assigned to works

: Companies and organizations that distribute works

: Organizations that fund research

: Where things are in the world

Locations are meant to capture the way that a work exists in different versions. So, for example, a work may have a version that has been peer-reviewed and published in a journal (the ). This would be one of the work's locations. It may have another version available on a preprint server like —this version having been posted before it was accepted for publication. This would be another one of the work's locations.

Below is an example of a work in OpenAlex () that has multiple locations with different properties. The version of record, published in a peer-reviewed journal, is listed first, and is not open-access. The second location is a university repository, where one can find an open-access copy of the published version of the work. Other locations are listed below.

Locations are meant to cover anywhere that a given work can be found. This can include journals, proceedings, institutional repositories, and subject-area repositories like and . If you are only interested in a certain one of these (like journal), you can use a to specify the locations.source.type. ()

: The best (closest to the ) copy of this work.

: The best available open access location of this work.

: A list of all of the locations where this work lives. This will include the two locations above if availabe, and can also include other locations.

Boolean: true if this location's is either acceptedVersion or publishedVersion; otherwise false.

There are . OpenAlex uses a broad definition: having a URL where you can read the fulltext of this work without needing to pay money or log in.

Boolean: true if this location's is publishedVersion; otherwise false.

String: The location's publishing license. This can be a license such as cc0 or cc-by, a publisher-specific license, or null which means we are not able to determine a license for this location.

Object: Information about the source of this location, as a object.

String: The version of the work, based on the Possible values are:.

Get the work with the W2741809807:

That will return a object, describing everything OpenAlex knows about the work with that ID.

You can make up to 50 of these queries at once by requesting a list of entities and filtering on IDs ().

Get the work with this DOI: https://doi.org/10.7717/peerj.4375:

Get the work with PubMed ID: https://pubmed.ncbi.nlm.nih.gov/14907713:

You can use select to limit the fields that are returned in a work object. More details are .

Display only the id and display_name for a work object

The Authorship object represents a single author and her institutional affiliations in the context of a given work. It is only found as part of a Work object, in the property.

Each institutional affiliation that this author has claimed will be listed here: the raw affiliation string that we found, along with the OpenAlex ID or IDs that we matched it to.

This information will be redundant with below, but is useful if you need to know about what we used to match institutions.

String: An author of this work, as a dehydrated object.

Note that, sometimes, we assign ORCID using , so the ORCID we associate with an author was not necessarily included with this work.

List: The institutional affiliations this author claimed in the context of this work, as objects.

Get all of the works in OpenAlex

You can works and change the default number of results returned with the page and per-page parameters:

Get a second page of results with 50 results per page

You can with the sort parameter:

Sort works by publication year

Continue on to learn how you can and lists of works.

You can use sample to get a random batch of works. Read more about sampling and how to add a seed value .

Get 20 random works

You can use select to limit the fields that are returned in a list of works. More details are .

Display only the id and display_name within works results

DOI

doi

Microsoft Academic Graph (MAG)

mag

PubMed ID (PMID)

pmid

PubMed Central ID (PMCID)

pmcid

Journal articles, books, datasets, and theses

Works are scholarly documents like journal articles, books, datasets, and theses. OpenAlex indexes over 240M works, with about 50,000 added daily. You can access a work in the OpenAlex API like this:

Get a list of OpenAlex works:

https://api.openalex.org/works

That will return a list of Work object, describing everything OpenAlex knows about each work. We collect new works from many sources, including Crossref, PubMed, institutional and discipline-specific repositories (eg, arXiv). Many older works come from the now-defunct Microsoft Academic Graph (MAG).

Works are linked to other works via the referenced_works (outgoing citations), cited_by_api_url (incoming citations), and related_works properties.

Learn more about what you can do with works:

People who create works

Authors are people who create works. You can get an author from the API like this:

Get a list of OpenAlex authors:

https://api.openalex.org/authors

The Canonical External ID for authors is ORCID; only a small percentage of authors have one, but the percentage is higher for more recent works.

Our information about authors comes from MAG, Crossref, PubMed, ORCID, and publisher websites, among other sources. To learn more about how we combine this information to get OpenAlex Authors, see Author Disambiguation.

Authors are linked to works via the works.authorships property.

Learn more about what you can with authors:

N-grams are groups of sequential words that occur in the text of a Work.

Note that while n-grams are derived from the fulltext of a Work, the presence of n-grams for a given Work doesn't imply that the fulltext is available to you, the reader. It only means the fulltext was available to Internet Archive for indexing. Work.open_access is the place to go for information on public fulltext availability.

The n-gram API endpoint is not currently in service. The n-grams are still used on our backend to help power fulltext search. If you have any questions about this, please submit a support ticket.

You can see which works we have full-text for using the has_fulltext filter. This does not necessarily mean that the full text is available to you, dear reader; rather, it means that we have indexed the full text and can use it to help power searches. If you are trying to find the full text for yourself, try looking in open_access.oa_url.

We get access to the full text in one of two ways: either using an open-access PDF, or using N-grams obtained from the Internet Archive. You can learn where a work's full text came from at fulltext_origin.

About 57 million works have n-grams coverage through Internet Archive. OurResearch is the first organization to host this data in a highly usable way, and we are proud to integrate it into OpenAlex!

Curious about n-grams used in search? Browse them all via the API. Highly-cited works and less recent works are more likely to have n-grams, as shown by the coverage charts below:

It's easy to filter works with the filter parameter:

In this example the filter is publication_year and the value is 2020.

/works attribute filters/works convenience filtersabstract.searchText search using abstracts

Value: a search string

authors_countNumber of authors for a work

Value: an Integer

authorships.institutions.continent (alias: institutions.continent)Returns: works where at least one of the author's institutions is in the chosen continent.

authorships.institutions.is_global_south (alias: institutions.is_global_south)Value: a Boolean (true or false)

best_open_versionValue: a String with one of the following values:

any: This means that best_oa_location.version = submittedVersion, acceptedVersion, or publishedVersion

acceptedOrPublished: This means that best_oa_location.version can be acceptedVersion or publishedVersion

published: This means that best_oa_location.version = publishedVersion

cited_bycitesconcepts_countValue: an Integer

default.searchText search across titles, abstracts, and full text of works

Value: a search string

display_name.search (alias: title.search)Text search across titles for works

Value: a search string

from_created_dateValue: a date, formatted as yyyy-mm-dd

from_publication_dateValue: a date, formatted as yyyy-mm-dd

Filtering by publication date is not a reliable way to retrieve recently updated and created works, due to the way publishers assign publication dates. Use from_created_date or from_updated_date to get the latest changes in OpenAlex.

from_updated_datefulltext.searchValue: a search string

We combined some n-grams before storing them in our search database, so querying for an exact phrase using quotes does not always work well.

has_abstractWorks that have an abstract available

Value: a Boolean (true or false)

Returns: works that have or lack an abstract, depending on the given value.

has_doiValue: a Boolean (true or false)

has_oa_accepted_or_published_versionValue: a Boolean (true or false)

has_oa_submitted_versionValue: a Boolean (true or false)

has_orcidValue: a Boolean (true or false)

has_pmcidValue: a Boolean (true or false)

has_pmidValue: a Boolean (true or false)

has_ngrams (DEPRECATED)Works that have n-grams available to enable full-text search in OpenAlex.

Value: a Boolean (true or false)

has_referencesValue: a Boolean (true or false)

journallocations.source.host_institution_lineagelocations.source.publisher_lineagemag_onlyValue: a Boolean (true or false)

Returns: works which came from MAG (Microsoft Academic Graph), and no other data sources.

primary_location.source.has_issnValue: a Boolean (true or false)

primary_location.source.publisher_lineageraw_affiliation_strings.searchThis filter used to be named raw_affiliation_string.search, but it is now raw_affiliation_strings.search (i.e., plural, with an 's').

Value: a search string

related_torepositoryYou can also use this as a group_by to learn things about repositories:

title_and_abstract.searchText search across titles and abstracts for works

Value: a search string

to_created_dateValue: a date, formatted as yyyy-mm-dd

to_publication_dateValue: a date, formatted as yyyy-mm-dd

to_updated_dateversionValue: a String with value publishedVersion, submittedVersion, acceptedVersion, or null

Searching without a middle initial returns names with and without middle initials. So a search for "John Smith" will also return "John W. Smith".

When searching for authors, there is no difference when using the search parameter or the filter display_name.search, since display_name is the only field searched when finding authors.

You can autocomplete authors to create a very fast type-ahead style search function:

This returns a list of authors with their last known affiliated institution as the hint:

The following fields can be searched within works:

Rather than searching for the names of entities related to works—such as authors, institutions, and sources—you need to search by a more unique identifier for that entity, like the OpenAlex ID. This means that there is a 2 step process:

Why can't you do this in just one step? Well, if you use the search term, "NYU," you might end up missing the ones that use the full name "New York University," rather than the initials. Sure, you could try to think of all possible variants and search for all of them, but you might miss some, and you risk putting in search terms that let in works that you're not interested in. Figuring out which works are actually associated with the "NYU" you're interested shouldn't be your responsibility—that's our job! We've done that work for you, so all the relevant works should be associated with one unique ID.

You can autocomplete works to create a very fast type-ahead style search function:

This returns a list of works titles with the author of each work set as the hint:

affiliationsList: List of objects, representing the affiliations this author has claimed in their publications. Each object in the list has two properties:

years: a list of the years in which this author claimed an affiliation with this institution

cited_by_countcounts_by_yearAny works or citations older than ten years old aren't included. Years with zero works and zero citations have been removed so you will need to add those in if you need them.

created_datedisplay_nameString: The name of the author as a single string.

display_name_alternativesList: Other ways that we've found this author's name displayed.

idString: The OpenAlex ID for this author.

idsObject: All the external identifiers that we know about for this author. IDs are expressed as URIs whenever possible. Possible ID types:

twitter (String: this author's Twitter handle)

wikipedia (String: this author's Wikipedia page)

Most authors are missing one or more ID types (either because we don't know the ID, or because it was never assigned). Keys for null IDs are not displayed.

last_known_institution (deprecated)last_known_institutionsorcidCompared to other Canonical IDs, ORCID coverage is relatively low in OpenAlex, because ORCID adoption in the wild has been slow compared with DOI, for example. This is particularly an issue when dealing with older works and authors.

summary_statsObject: Citation metrics for this author

While the 2-year mean citedness is normally a journal-level metric, it can be calculated for any set of papers, so we include it for authors.

updated_dateworks_api_urlString: A URL that will get you a list of all this author's works.

We express this as an API URL (instead of just listing the works themselves) because sometimes an author's publication list is too long to reasonably fit into a single author object.

works_countx_conceptsscore (Float): The strength of association between this author and the listed concept, from 0-100.

Author objectTo see the full list of authors, go to the individual record for the work, which is never truncated.

This affects filtering as well. So if you filter works using an author ID or ROR, you will not receive works where that author is listed further than 100 places down on the list of authors. We plan to change this in the future, so that filtering works as expected.

You can filter authors with the filter parameter:

/authors attribute filtersWant to filter by last_known_institution.display_name? This is a two-step process:

Find the institution.id by searching institutions by display_name.

Filter works by last_known_institution.id.

/authors convenience filtersdefault.searchValue: a search string

display_name.searchValue: a search string

has_orcidValue: a Boolean (true or false)

last_known_institution.continentReturns: authors where where the last known institution is in the chosen continent.

last_known_institution.is_global_southValue: a Boolean (true or false)

You can filter sources with the filter parameter:

/sources attribute filtersWant to filter by host_organization.display_name? This is a two-step process:

Find the host organization's ID by searching by display_name in Publishers or Institutions, depending on which type you are looking for.

Filter works by host_organization.id.

/sources convenience filterscontinentReturns: sources that are associated with the chosen continent.

default.searchValue: a search string

display_name.searchValue: a search string

has_issnValue: a Boolean (true or false)

is_global_southValue: a Boolean (true or false)

Journals and repositories that host works

Sources are where works are hosted. OpenAlex indexes about 249,000 sources. There are several types, including journals, conferences, preprint repositories, and institutional repositories.

Our information about sources comes from Crossref, the ISSN Network, and MAG. These datasets are joined automatically where possible, but there’s also a lot of manual combining involved. We do not curate journals, so any journal that is available in the data sources should make its way into OpenAlex.

Learn more about what you can do with sources:

N-grams list the words and phrases that occur in the full text of a Work. We obtain them from Internet Archive's publicly (and generously ) available General Index and use them to enable fulltext searches on the Works that have them, through both the fulltext.search filter, and as an element of the more holistic search parameter.

Get works where the publication year is 2020

It's best to before trying these out. It will show you how to combine filters and build an AND, OR, or negation query.

You can filter using these attributes of the object (click each one to view their documentation on the Work object page):

The host_venue and alternate_host_venues properties have been deprecated in favor of and . The attributes host_venue and alternate_host_venues are no longer available in the Work object, and trying to access them in filters or group-bys will return an error.

(alias: author.id) — Authors for a work (OpenAlex ID)

(alias: author.orcid) — Authors for a work (ORCID)

(alias: institutions.country_code)

(alias: institutions.id) — Institutions affiliated with the authors of a work (OpenAlex ID)

(alias: institutions.ror) — Institutions affiliated with the authors of a work (ROR ID)

(alias: is_corresponding) — This filter marks whether or not we have corresponding author information for a given work

— The Open Acess license for a work

(alias: concept.id) — The concepts associated with a work

— Corresponding authors for a work (OpenAlex ID)

— The DOI (Digital Object Identifier) of a work

— Award IDs for grants

— Funding organizations linked to grants for a work

(alias: pmid)

(alias: openalex) — The OpenAlex ID for a work

(alias: mag)

(alias: is_oa) — Whether a work is Open Access

(alias: oa_status) — The Open Access status for a work (e.g., gold, green, hybrid, etc.)

Want to filter by the display_name of an associated entity (author, institution, source, etc.)?

These filters aren't attributes of the object, but they're handy for solving some common use cases:

Returns: works whose abstract includes the given string. See the for details on the search algorithm used.

Get works with abstracts that mention "artificial intelligence":

Returns: works with the chosen number of objects (authors). You can use the inequality filter to select a range, such as authors_count:>5.

Get works that have exactly one author

Value: a String with a valid

Get works where at least one author's institution in each work is located in Europe

Returns: works where at least one of the author's institutions is in the Global South ().

Get works where at least one author's institution is in the Global South

Returns: works that meet the above criteria for .

Get works whose best_oa_location is a submitted, accepted, or published version: ``

Value: the for a given work

Returns: works found in the given work's section. You can think of this as outgoing citations.

Get works cited by :

Value: the for a given work

Returns: works that cite the given work. This is works that have the given OpenAlex ID in the section. You can think of this as incoming citations.

Get works that cite : ``

The number of results returned by this filter may be slightly higher than the work'sdue to a timing lag in updating that field.

Returns: works with the chosen number of .

Get works with at least three concepts assigned

This works the same as using the for Works.

Returns: works whose (title) includes the given string; see the for details.

Get works with titles that mention the word "wombat":

For most cases, you should use the parameter instead of this filter, because it uses a better search algorithm and searches over abstracts as well as titles.

Returns: works with greater than or equal to the given date.

This field requires an

Get works created on or after January 12th, 2023 (does not work without valid API key):

Returns: works with greater than or equal to the given date.

Get works published on or after March 14th, 2001:

Value: a date, formatted as an date or date-time string (for example: "2020-05-17", "2020-05-17T15:30", or "2020-01-02T00:22:35.180390").

Returns: works with greater than or equal to the given date.

This field requires an

Get works updated on or after January 12th, 2023 (does not work without valid API key):

Learn more about using this filter to get the freshest data possible with our .

Returns: works whose fulltext includes the given string. Fulltext search is available for a subset of works, obtained either from PDFs or , see for more details.

Get works with fulltext that mention "climate change":

Get the works that have abstracts:

Returns: works that have or lack a DOI, depending on the given value. It's especially useful for .

Get the works that have no DOI assigned: ``

Returns: works with at least one of the has = true and is acceptedVersion or publishedVersion. For Works that undergo peer review, like journal articles, this means there is a peer-reviewed OA copy somewhere. For some items, like books, a published version doesn't imply peer review, so they aren't quite synonymous.

Get works with an OA accepted or published copy

Returns: works with at least one of the has = true and is submittedVersion. This is useful for finding works with preprints deposited somewhere.

Get works with an OA submitted copy: ``

Returns: if true it returns works where at least one author or has an . If false, it returns works where no authors have an ORCID ID. This is based on the orcid field within . Note that, sometimes, we assign ORCID using , so this does not necessarily mean that the work itself has ORCID information.

Get the works where at least one author has an ORCID ID:

Returns: works that have or lack a PubMed Central identifier () depending on the given value.

Get the works that have a pmcid:

``

Returns: works that have or lack a PubMed identifier (), depending on the given value.

Get the works that have a pmid:

``

This filter has been deprecated. See instead: .

Returns: works for which n-grams are available or unavailable, depending on the given value. N-grams power fulltext searches through the filter and the parameter.

Get the works that have n-grams:

Returns: works that have or lack , depending on the given value.

Get the works that have references:

Value: the for a given , where the source is

Returns: works where the chosen is the .

Value: the for an

Returns: works where the given institution ID is in

Get the works that have https://openalex.org/I205783295 in their host_organization_lineage:

Value: the for a

Returns: works where the given publisher ID is in

Get the works that have https://openalex.org/P4310320547 in their publisher_lineage:

MAG was a project by Microsoft Research to catalog all of the scholarly content on the internet. After it was discontinued in 2021, OpenAlex built upon the data MAG had accumulated, connecting and expanding it using . The methods that MAG used to identify and aggregate scholarly content were quite different from most of our other sources, and so the content inherited from MAG, especially works that we did not connect with data from other sources, can look different from other works. While it's great to have these MAG-only works available, you may not always want to include them in your results or analyses. This filter allows you to include or exclude any works that came from MAG and only MAG.

Get all MAG-only works:

Returns: works where the has at least one ISSN assigned.

Get the works that have an ISSN within the primary location:

Value: the for a

Returns: works where the given publisher ID is in

Get the works that have https://openalex.org/P4310320547 in their publisher_lineage:

Returns: works that have at least one which includes the given string. See the for details on the search algorithm used.

Get works with the words Department of Political Science, University of Amsterdam somewhere in at least one author's raw_affiliation_strings:

Value: the for a given work

Returns: works found in the given work's section.

Get works related to :

Value: the for a given , where the source is

Returns: works where the chosen exists within the .

You can use this to find works where authors are associated with your university, but the work is not part of the university's repository.

Get works that are available in the University of Michigan Deep Blue repository (OpenAlex ID: https://openalex.org/S4306400393)

Get works where at least one author is associated with the University of Michigan, but the works are not found in the University of Michigan Deep Blue repository

Learn which repositories have the most open access works

Returns: works whose (title) or abstract includes the given string; see the for details.

Get works with title or abstract mentioning "gum disease":

Returns: works with less than or equal to the given date.

This field requires an

Get works created on or after January 12th, 2023 (does not work without valid API key):

Returns: works with less than or equal to the given date.

Get works published on or before March 14th, 2001:

Value: a date, formatted as an date or date-time string (for example: "2020-05-17", "2020-05-17T15:30", or "2020-01-02T00:22:35.180390").

Returns: works with less than or equal to the given date.

This field requires an

Get works updated before or on January 12th, 2023 (does not work without valid API key):

Returns: works where the chosen version exists within the . If null, it returns works where no version is found in any of the locations.

Get works where a published version is available in at least one of the locations:

Get the author with the A5023888391:

That will return an object, describing everything OpenAlex knows about the author with that ID:

You can make up to 50 of these queries at once by .

Get the author with this ORCID: https://orcid.org/0000-0002-1298-3089:

You can use the full ID or a shorter Uniform Resource Name (URN) format like so:

You can use select to limit the fields that are returned in an author object. More details are .

Display only the id and display_name and orcid for an author object

Get counts of works by Open Access status:

It's best to before trying these out. It will show you how results are formatted, the number of results returned, and how to sort results.

The host_venue and alternate_host_venues properties have been deprecated in favor of and . The attributes host_venue and alternate_host_venues are no longer available in the Work object, and trying to access them in filters or group-bys will return an error.

(alias author.id)

(alias author.orcid)

(alias institutions.country_code)

(alias institutions.continent)

(alias institutions.id)

(alias institutions.ror)

(alias institutions.type)

(alias: is_corresponding): this marks whether or not we have corresponding author information for a given work

(DEPRECATED)

(alias is_oa)

(alias oa_status)

The best way to search for authors is to use the search query parameter, which searches the and the fields. Example:

Get works with the author name "Carl Sagan":

Names with diacritics are flexible as well. So a search for David Tarrago can return David Tarragó, and a search for David Tarragó can return David Tarrago. When searching with a diacritic, diacritic versions of the names are prioritized in order to honor the original form of the author's name. Read more about our handling of diacritics .

You can read more in the in the API Guide. It will show you how relevance score is calculated, how words are stemmed to improve search results, and how to do complex boolean searches.

You can also use search as a , by appending .search to the end of the property you are filtering for:

Get authors with the name "john smith" in the display_name:

You can also use the filter default.search, which works the same as using the .

Autocomplete authors with "ronald sw" in the display name:

Read more about .

The best way to search for works is to use the search query parameter, which searches across , , and . Example:

Get works with search term "dna" in the title, abstract, or fulltext:

Fulltext search is available for a subset of works, see for more details.

You can read more about search . It will show you how relevance score is calculated, how words are stemmed to improve search results, and how to do complex boolean searches.

You can use search as a , allowing you to fine-tune the fields you're searching over. To do this, you append .search to the end of the property you are filtering for:

Get works with "cubist" in the title:

You can also use the filter default.search, which works the same as using the .

These searches make use of stemming and stop-word removal. You can disable this for searches on titles and abstracts. Learn how to do this .

Find the ID of the related entity. For example, if you're interested in works associated with NYU, you could search the /institutions endpoint for that name: . Looking at the first result, you'll see that the OpenAlex ID for NYU is I57206974.

Use a with the /works endpoint to get all of the works: .

Autocomplete works with "tigers" in the title:

Read more about .

When you use the API to get a or , this is what's returned.

institution: a object

Integer: The total number that cite a work this author has created.

List: and for each of the last ten years, binned by year. To put it another way: each year, you can see how many works this author published, and how many times they got cited.

String: The date this Author object was created in the OpenAlex dataset, expressed as an date string.

openalex (String: this author's . Same as )

orcid (String: this author's . Same as )

scopus (String: this author's )

This field has been deprecated. Its replacement is .

List: List of Institution objects. This author's last known institutional affiliations. In this context "last known" means that we took all the author's , sorted them by publication date, and selected the most recent one. If there is only one affiliated institution for this author for the work, this will be a list of length 1; if there are multiple affiliations, they will all be included in the list.

Each item in the list is a object, and you can find more documentation on the page.

String: The for this author. ORCID is a global and unique ID for authors. This is the for authors.

2yr_mean_citedness Float: The 2-year mean citedness for this source. Also known as . We use the year prior to the current year for the citations (the numerator) and the two years prior to that for the citation-receiving publications (the denominator).

h_index Integer: The for this author.

i10_index Integer: The for this author.

String: The last time anything in this author object changed, expressed as an date string. This date is updated for any change at all, including increases in various counts.

Integer: The number of this this author has created.

This is updated a couple times per day. So the count may be slightly different than what's in works when viewed .

x_concepts will be deprecated and removed soon. We will be replacing this functionality with instead.

List: The concepts most frequently applied to works created by this author. Each is represented as a object, with one additional attribute:

The DehydratedAuthor is stripped-down object, with most of its properties removed to save weight. Its only remaining properties are:

When retrieving a list of works in the API, the authorships list within each work will be cut off at 100 authorships objects in order to keep things running well. When this happens the boolean value is_authors_truncated will be available and set to true. This affects a small portion of OpenAlex, as there are around 35,000 works with more than 100 authors. This limitation does not apply to the .

Example list of works with truncated authors

Work with all 249 authors available

Get authors that have an ORCID

It's best to before trying these out. It will show you how to combine filters and build an AND, OR, or negation query.

You can filter using these attributes of the Author entity object (click each one to view their documentation on the object page):

(alias: openalex)

(the author's scopus ID, as an integer)

(accepts float, null, !null, can use range queries such as < >)

(accepts integer, null, !null, can use range queries)

(accepts integer, null, !null, can use range queries)

(alias: concepts.id or concept.id) -- will be deprecated soon

To learn more about why we do it this way,

These filters aren't attributes of the , but they're included to address some common use cases:

This works the same as using the for Authors.

Returns: Authors whose contains the given string; see the for details.

Get authors named "tupolev":

Returns: authors that have or lack an , depending on the given value.

Get the authors that have an ORCID: ``

Value: a String with a valid

Get authors where the last known institution is located in Africa

Returns: works where at least one of the author's institutions is in the .

Get authors where the last known institution is located in the Global South

Get counts of authors by the last known institution continent: ``

It's best to before trying these out. It will show you how results are formatted, the number of results returned, and how to sort results.

Get all authors in OpenAlex

By default we return 25 results per page. You can change this default and through works with the per-page and page parameters:

Get the second page of authors results, with 50 results returned per page

You also can with the sort parameter:

Sort authors by cited by count, descending

Continue on to learn how you can and lists of authors.

You can use sample to get a random batch of authors. Read more about sampling and how to add a seed value .

Get 25 random authors

You can use select to limit the fields that are returned in a list of authors. More details are .

Display only the id and display_name and orcid within authors results

Get sources that have an ISSN

It's best to before trying these out. It will show you how to combine filters and build an AND, OR, or negation query

You can filter using these attributes of the Source entity object (click each one to view their documentation on the object page):

(alias: host_organization.id)

— Use this with a publisher ID to find works from that publisher and all of its children.

(alias: openalex)

— Requires exact match. Use the filter instead if you want to find works from a publisher and all of its children.

(accepts float, null, !null, can use range queries such as < >)

(accepts integer, null, !null, can use range queries)

(accepts integer, null, !null, can use range queries)

(alias: concepts.id or concept.id) -- will be deprecated soon

To learn more about why we do it this way,

These filters aren't attributes of the object, but they're included to address some common use cases:

Value: a String with a valid

Get sources that are associated with Asia

This works the same as using the for Sources.

Returns: sources with a containing the given string; see the for details.

Get sources with names containing "Neurology": ``

In most cases, you should use the parameter instead of this filter because it uses a better search algorithm.

Returns: sources that have or lack an , depending on the given value.

Get sources without ISSNs: ``

Returns: sources that are associated with the .

Get sources that are located in the Global South

We have created a page in our help docs to give you all the information you need about our author disambiguation including information about author IDs, how we disambiguate authors, and how you can curate your author profile. Go to to find out what you need to know!

Get the source with the S137773608:

That will return an object, describing everything OpenAlex knows about the source with that ID:

You can make up to 50 of these queries at once by .

Get the source with ISSN: 2041-1723:

You can use select to limit the fields that are returned in a source object. More details are .

Display only the id and display_name for a source object

Get a list of OpenAlex sources:

The for sources is ISSN-L, which is a special "main" ISSN assigned to every sources (sources tend to have multiple ISSNs). About 90% of sources in OpenAlex have an ISSN-L or ISSN.

Several sources may host the same work. OpenAlex reports both the primary host source (generally wherever the lives), and alternate host sources (like preprint repositories).

Sources are linked to works via the and properties.

Check out the , a Jupyter notebook showing how to use Python and the API to learn about all of the sources in a country.

ORCID

orcid

Scopus

scopus

twitter

Wikipedia

wikipedia

fulltext via n-grams

ISSN

issn

Fatcat

fatcat

Microsoft Academic Graph (MAG)

mag

Wikidata

wikidata

It's easy to get an institution from from the API with: /institutions/<entity_id>. Here's an example:

Get the institution with the OpenAlex ID I27837315:

https://api.openalex.org/institutions/I27837315

That will return an Institution object, describing everything OpenAlex knows about the institution with that ID:

You can make up to 50 of these queries at once by requesting a list of entities and filtering on IDs using OR syntax.

You can look up institutions using external IDs such as a ROR ID:

Get the institution with ROR ID https://ror.org/00cvxb145:

https://api.openalex.org/institutions/ror:https://ror.org/00cvxb145

Available external IDs for institutions are:

ROR

ror

Microsoft Academic Graph (MAG)

mag

Wikidata

wikidata

You can use select to limit the fields that are returned in an institution object. More details are here.

Display only the id and display_name for an institution object

https://api.openalex.org/institutions/I27837315?select=id,display_name

These are the fields in an institution object. When you use the API to get a single institution or lists of institutions, this is what's returned.

associated_institutionsList: Institutions related to this one. Each associated institution is represented as a dehydrated Institution object, with one extra property:

relationship (String): The type of relationship between this institution and the listed institution. Possible values: parent, child, and related.

Institution associations and the relationship vocabulary come from ROR's relationships.

cited_by_countInteger: The total number Works that cite a work created by an author affiliated with this institution. Or less formally: the number of citations this institution has collected.

country_codeString: The country where this institution is located, represented as an ISO two-letter country code.

counts_by_yearList: works_count and cited_by_count for each of the last ten years, binned by year. To put it another way: each year, you can see how many new works this institution put out, and how many times any work affiliated with this institution got cited.

Years with zero citations and zero works have been removed so you will need to add those in if you need them.

created_dateString: The date this Institution object was created in the OpenAlex dataset, expressed as an ISO 8601 date string.

display_nameString: The primary name of the institution.

display_name_acronymsList: Acronyms or initialisms that people sometimes use instead of the full display_name.

display_name_alternativesList: Other names people may use for this institution.

geoObject: A bunch of stuff we know about the location of this institution:

city (String): The city where this institution lives.

geonames_city_id (String): The city where this institution lives, as a GeoNames database ID.

region (String): The sub-national region (state, province) where this institution lives.

country_code (String): The country where this institution lives, represented as an ISO two-letter country code.

country (String): The country where this institution lives.

latitude (Float): Does what it says.

longitude (Float): Does what it says.

homepage_urlString: The URL for institution's primary homepage.

idString: The OpenAlex ID for this institution.

idsObject: All the external identifiers that we know about for this institution. IDs are expressed as URIs whenever possible. Possible ID types:

mag (Integer: this institution's Microsoft Academic Graph ID)

openalex (String: this institution's OpenAlex ID. Same as Institution.id)

ror (String: this institution's ROR ID. Same as Institution.ror)

wikipedia (String: this institution's Wikipedia page URL)

wikidata (String: this institution's Wikidata ID)

Many institution are missing one or more ID types (either because we don't know the ID, or because it was never assigned). Keys for null IDs are not displayed.

image_thumbnail_urlString: Same as image_url, but it's a smaller image.

is_super_systemBoolean: True if this institution is a "super system". This includes large university systems such as the University of California System (https://openalex.org/I2803209242), as well as some governments and multinational companies.

We have this special flag for these institutions so that we can exclude them from other institutions' lineage, which we do because these super systems are not generally relevant in group-by results when you're looking at ranked lists of institutions.

The list of institution IDs marked as super systems can be found in this file.

image_urlString: URL where you can get an image representing this institution. Usually this is hosted on Wikipedia, and usually it's a seal or logo.

internationalObject: The institution's display name in different languages. Derived from the wikipedia page for the institution in the given language.

display_name (Object)

key (String): language code in wikidata language code format. Full list of languages is here.

value (String): display_name in the given language

lineageList: OpenAlex IDs of institutions. The list will include this institution's ID, as well as any parent institutions. If this institution has no parent institutions, this list will only contain its own ID.

This information comes from ROR's relationships, specifically the Parent/Child relationships.

Super systems are excluded from the lineage. See is_super_system above.

repositoriesList: Repositories (Sources with type: repository) that have this institution as their host_organization

rolesList: List of role objects, which include the role (one of institution, funder, or publisher), the id (OpenAlex ID), and the works_count.

In many cases, a single organization does not fit neatly into one role. For example, Yale University is a single organization that is a research university, funds research studies, and publishes an academic journal. The roles property links the OpenAlex entities together for a single organization, and includes counts for the works associated with each role.

The roles list of an entity (Funder, Publisher, or Institution) always includes itself. In the case where an organization only has one role, the roles will be a list of length one, with itself as the only item.

rorString: The ROR ID for this institution. This is the Canonical External ID for institutions.

The ROR (Research Organization Registry) identifier is a globally unique ID for research organization. ROR is the successor to GRiD, which is no longer being updated.

summary_statsObject: Citation metrics for this institution

2yr_mean_citedness Float: The 2-year mean citedness for this source. Also known as impact factor. We use the year prior to the current year for the citations (the numerator) and the two years prior to that for the citation-receiving publications (the denominator).

h_index Integer: The h-index for this institution.

i10_index Integer: The i-10 index for this institution.

While the h-index and the i-10 index are normally author-level metrics and the 2-year mean citedness is normally a journal-level metric, they can be calculated for any set of papers, so we include them for institutions.

typeString: The institution's primary type, using the ROR "type" controlled vocabulary.

Possible values are: Education, Healthcare, Company, Archive, Nonprofit, Government, Facility, and Other.

updated_dateString: The last time anything in this Institution changed, expressed as an ISO 8601 date string. This date is updated for any change at all, including increases in various counts.

works_api_urlString: A URL that will get you a list of all the Works affiliated with this institution.

We express this as an API URL (instead of just listing the Works themselves) because most institutions have way too many works to reasonably fit into a single return object.

works_countInteger: The number of Works created by authors affiliated with this institution. Or less formally: the number of works coming out of this institution.

x_conceptsx_concepts will be deprecated and removed soon. We will be replacing this functionality with Topics instead.

List: The Concepts most frequently applied to works affiliated with this institution. Each is represented as a dehydrated Concept object, with one additional attribute:

score (Float): The strength of association between this institution and the listed concept, from 0-100.

DehydratedInstitution objectThe DehydratedInstitution is a stripped-down Institution object, with most of its properties removed to save weight. Its only remaining properties are:

These are the fields in a source object. When you use the API to get a single source or lists of sources, this is what's returned.

String: An abbreviated title obtained from the ISSN Centre.

Array: Alternate titles for this source, as obtained from the ISSN Centre and individual work records, like Crossref DOIs, that carry the source name as a string. These are commonly abbreviations or translations of the source's canonical name.

List: List of objects, each with price (Integer) and currency (String).

Article processing charge information, taken directly from DOAJ.

Integer: The source's article processing charge in US Dollars, if available from DOAJ.

The apc_usd value is calculated by taking the APC price (see apc_prices) with a currency of USD if it is available. If it's not available, we convert the first available value from apc_prices into USD, using recent exchange rates.

cited_by_countInteger: The total number of Works that cite a Work hosted in this source.

country_codeString: The country that this source is associated with, represented as an ISO two-letter country code.

counts_by_yearList: works_count and cited_by_count for each of the last ten years, binned by year. To put it another way: each year, you can see how many new works this source started hosting, and how many times any work in this source got cited.

If the source was founded less than ten years ago, there will naturally be fewer than ten years in this list. Years with zero citations and zero works have been removed so you will need to add those in if you need them.

created_dateString: The date this Source object was created in the OpenAlex dataset, expressed as an ISO 8601 date string.

display_nameString: The name of the source.

homepage_urlString: The starting page for navigating the contents of this source; the homepage for this source's website.

host_organizationString: The host organization for this source as an OpenAlex ID. This will be an Institution.id if the source is a repository, and a Publisher.id if the source is a journal, conference, or eBook platform (based on the type field).

host_organization_lineageList: OpenAlex IDs — See Publisher.lineage. This will only be included if the host_organization is a publisher (and not if the host_organization is an institution).

host_organization_nameString: The display_name from the host_organization, shown for convenience.

idString: The OpenAlex ID for this source.

idsObject: All the external identifiers that we know about for this source. IDs are expressed as URIs whenever possible. Possible ID types:

fatcat (String: this source's Fatcat ID)